Researchers have developed an on-chip cryptographic verification protocol for quantum computers that lets a device validate its own computations despite hardware noise. The approach, detailed in a paper published in Physical Review Letters on November 11, 2025, is the work of teams from Sorbonne University, the University of Edinburgh, and Quantinuum.

Quantum computers harness quantum mechanical effects to perform calculations, potentially surpassing classical computers in specific tasks. However, these machines are notoriously susceptible to errors, and noise often corrupts their output. In response, scientists have been exploring verification protocols to ensure that quantum computations are accurate and secure. The newly proposed protocol not only verifies computations but also incorporates cryptographic security to prevent tampering by malicious actors.

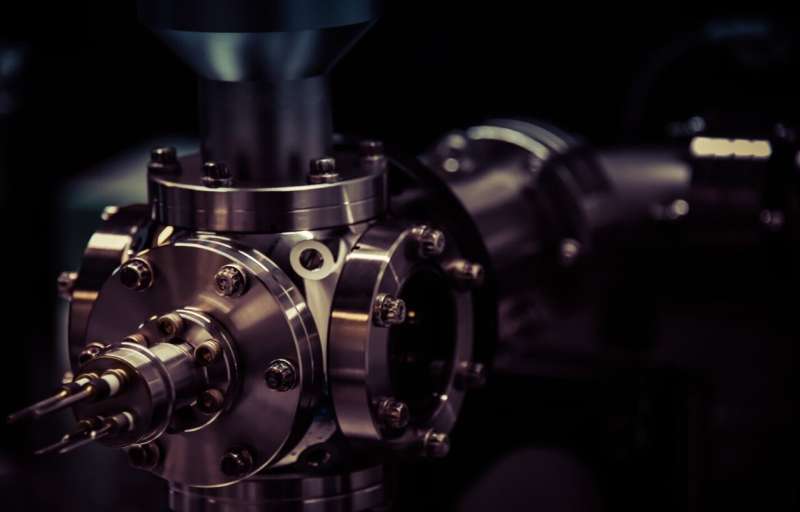

The research team, led by theoretical physicist Cica Gustiani, successfully tested the protocol on Quantinuum’s H1-1 quantum processor. “This project started as a collaboration to bridge the gap between theory and practical application,” Gustiani explained. “We had strong theoretical results and wanted to test them on real hardware.” The researchers tailored the protocol specifically for the H1-1, whose gate operations and measurements have demonstrated high fidelity.

Achieving Secure Quantum Verification

The primary goal of the team’s work was to create a verification protocol that is both cryptographically secure and suitable for current Noisy Intermediate-Scale Quantum (NISQ) systems. As quantum machines grow more complex, checking their output against a classical simulation becomes impractical. Researcher Dan Mills from Quantinuum noted, “Verification is essential for our users to trust the output of these machines. We aimed to develop a practical protocol tailored to our technology.”

Previous verification solutions primarily existed as theoretical constructs and were not applicable to existing quantum processors. Gustiani clarified, “In simple terms, we aimed to enable quantum computers to prove their own reliability.” The team adapted a cryptographic verification method typically used in client-server models to function entirely on a single chip, eliminating the need for external systems.

“This allows the quantum computer to verify its computations in real-time, even under less than ideal conditions,” Gustiani added. This protocol represents one of the first on-chip verification methods, setting it apart from other approaches, such as Google’s recent Quantum Echoes experiment, which relied on separate processors to cross-check results.

Successful Testing and Future Developments

The researchers deployed their protocol on the H1-1 trapped-ion device, alternating between test runs and actual computations. Gustiani stated, “We successfully verified the largest measurement-based quantum computation to date, involving 52 entangled qubits.” This achievement underscores the feasibility of cryptographic verification techniques in current quantum computing frameworks.
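
The idea of alternating test runs with actual computations can be illustrated with a small classical toy model. The sketch below is not the team's protocol: the function name, the callbacks, the failure threshold, and the majority vote over computation outputs are all assumptions made for illustration, and the "device" is whatever callback the caller supplies.

```python
import random
from collections import Counter


def run_interleaved_verification(n_rounds, n_test, max_failed_tests,
                                 run_test_round, run_compute_round, rng=None):
    """Toy model: hide test rounds among computation rounds.

    All names and the acceptance rule are hypothetical.
    run_test_round() returns True if a test round passed.
    run_compute_round() returns the output of one computation round.
    Returns the majority output, or None if too many tests failed.
    """
    if not 0 <= n_test < n_rounds:
        raise ValueError("need at least one computation round")
    rng = rng or random.Random()

    # Choose at random which rounds are tests, so that a faulty or
    # malicious device cannot treat test rounds differently.
    test_positions = set(rng.sample(range(n_rounds), n_test))

    failed_tests = 0
    outputs = []
    for i in range(n_rounds):
        if i in test_positions:
            if not run_test_round():
                failed_tests += 1
        else:
            outputs.append(run_compute_round())

    # Assumed acceptance rule: reject when too many test rounds fail.
    if failed_tests > max_failed_tests:
        return None

    # Assumed aggregation: majority vote over the computation rounds.
    return Counter(outputs).most_common(1)[0][0]
```

In this toy model, a device that passes every test round and returns a consistent answer is accepted, while one that fails more test rounds than the chosen threshold has its result rejected outright.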

Looking ahead, the team aims to refine their protocol further. They are working to enhance its compatibility with fault-tolerant architectures, addressing more complex noise models that could arise in practical applications. “We want to tighten confidence bounds to estimate the reliability of our protocol under various noise conditions,” Gustiani explained.

Mills emphasized the importance of adapting these verification techniques for future quantum processors. “As we continue to develop our machines, integrating these verification tools with error detection and correction codes presents new challenges, but we are excited about the potential.”

This research is a step toward reliable quantum computing: it provides a method for self-verification that could prove crucial as quantum systems grow in size and complexity, and it may lead to more secure and trustworthy computation.