A recent study reveals mixed feelings among parents and children regarding the use of AI-generated images in children’s literature. While many parents express acceptance of these images if the text is authored by humans and the visuals reviewed by experts, concerns remain about the potential inaccuracies in illustrations. These inaccuracies could inadvertently lead to unsafe behaviors or distort real-world concepts.

According to Qiao Jin, the lead author of the study and an assistant professor at North Carolina State University, the research aimed to understand how AI-generated illustrations impact the reading experience for both parents and children. “We know that generative AI tools are being used to create illustrations for children’s stories, but there has been very little work on how parents and children feel about these AI-generated images,” Jin stated.

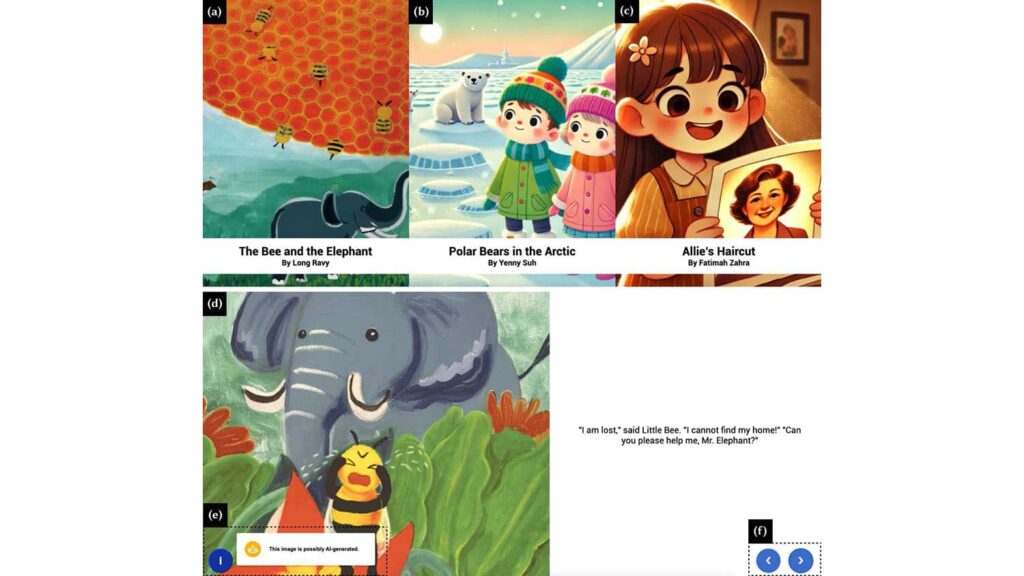

The researchers engaged 13 parent-child groups, each consisting of one child aged 4 to 8 and at least one parent. Each group read two of the three stories presented, which featured a mix of AI-generated art, human art enhanced by AI, and traditional human-created illustrations. After each story, children rated their reading experience and the related images in age-appropriate language, while parents participated in more detailed interviews regarding their preferences and concerns.

Findings indicate that children are often more sensitive to the emotional cues in illustrations than their parents. Jin pointed out that “children were more likely to notice any disconnect between the emotions conveyed by the images and those expressed in the text.” This disconnect frequently arises from AI’s challenges in accurately interpreting emotional content.

Parental concerns varied significantly depending on the nature of the stories. For instance, parents and older children were more vigilant about real-world accuracy in realistic or science-based stories than in fables. Older children noted discrepancies in size or behavior depicted in AI-generated images, while parents expressed apprehension about errors that could promote unsafe behavior.

While the majority of parents are open to AI-generated images, provided they are vetted by experts in children’s literature, some voiced fundamental concerns about replacing human artists and the perceived “artificial” quality of AI-generated illustrations. Additionally, most parents expressed discomfort with the idea of AI generating the text of stories.

The researchers also tested the effectiveness of small labels indicating whether an image was AI-generated. Most participants did not notice these labels, and some found them distracting during reading. Jin emphasized, “Parents preferred a clear notification on the cover of the story indicating whether AI was used to create the images, allowing them to make informed purchasing decisions.”

Key takeaways from the study include the need for straightforward cover labels to inform parents about AI involvement, the risk that specific errors in AI-generated images could encourage unsafe behavior or distort real-world concepts, and the importance of expert review for illustrations created by AI.

The findings are detailed in the paper titled “‘They all look mad with each other’: Understanding the Needs and Preferences of Children and Parents in AI-Generated Images for Stories,” published in the International Journal of Child-Computer Interaction. The corresponding author of the paper is Irene Ye Yuan of McMaster University. This research was supported by the OpenAI Researcher Access Program.