A new artificial intelligence model, named VSSFlow, has been developed by researchers from Apple and Renmin University of China, enabling the generation of sound and speech from silent videos in a single, cohesive system. This innovative model promises to enhance the way audio is created for video content, addressing significant limitations in current technology.

Addressing Limitations in Video-to-Sound Technology

Currently, many video-to-sound models struggle with generating coherent speech from silent videos, while text-to-speech systems are often unable to produce realistic non-speech sounds. Previous attempts to combine these functions typically assumed that joint training would reduce overall performance. This led to separate training processes for sound and speech, complicating the development pipeline.

To tackle these challenges, the team of researchers created VSSFlow, which integrates both sound effects and speech generation. Remarkably, the architecture allows for mutual enhancement between speech and sound training, leading to improved outcomes for both tasks rather than competition.

How VSSFlow Works

VSSFlow employs a series of advanced generative AI techniques. It converts transcripts into phoneme sequences and utilizes flow-matching to reconstruct sound from noise. This approach trains the model to effectively generate audio from random noise, embedding its functionality in a sophisticated 10-layer architecture that directly incorporates video and transcript signals into audio generation.

During the training phase, the researchers exposed VSSFlow to a variety of data sources. These included silent videos paired with environmental sounds, silent talking videos with transcripts, and text-to-speech data. Through this end-to-end training, the model learned to produce both sound effects and spoken dialogue concurrently.

Despite its initial limitations, VSSFlow was further optimized by fine-tuning on a substantial dataset containing examples where speech and environmental sounds were intermingled. This adjustment enabled the model to understand and generate both elements in unison.

VSSFlow begins its audio generation process from random noise, sampling visual cues from the video at ten frames per second to shape the ambient sound. Meanwhile, transcripts provide context for the speech, ensuring a cohesive audio output.

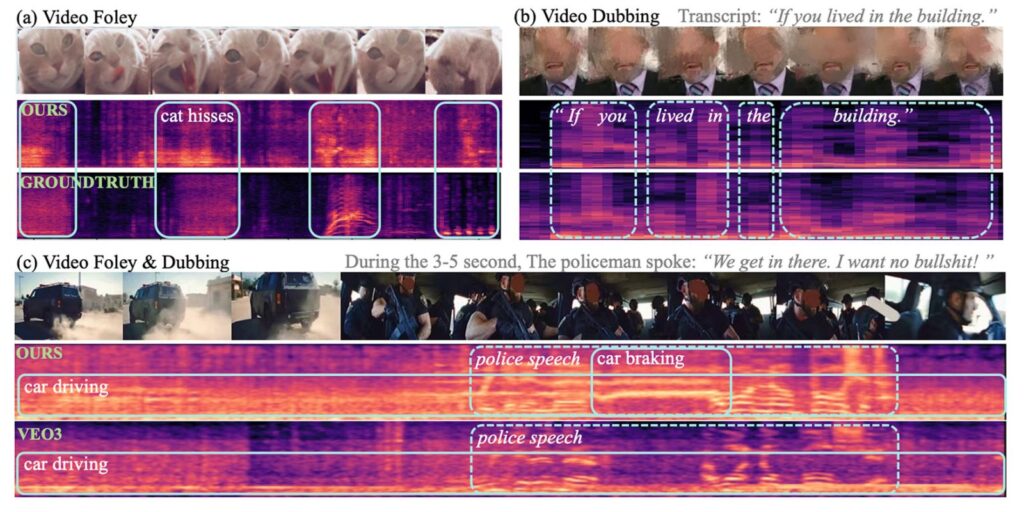

In comparative tests against models designed solely for sound effects or speech, VSSFlow demonstrated competitive performance across both areas. This achievement is notable given that it utilizes a unified system rather than separate frameworks.

The researchers have shared several demos showcasing the capabilities of VSSFlow, including examples of sound, speech, and joint generation from Veo3 videos. These demonstrations highlight the model’s versatility and effectiveness against traditional alternatives.

Additionally, the code for VSSFlow has been open-sourced on GitHub, and the team plans to release the model’s weights as well. A demonstration of its inference capabilities is also in the works.

For those interested in the technical aspects of the study, the researchers have published their findings in a paper titled “VSSFlow: Unifying Video-conditioned Sound and Speech Generation via Joint Learning.” This groundbreaking work has the potential to reshape audio generation for various applications, paving the way for richer, more immersive video experiences.