Following the recent launch of ChatGPT-5, many users are expressing profound grief over the loss of their AI companions. The update, which rolled out in early August 2025, introduced significant changes to the chatbot, including enhanced guardrails designed to reduce overly flattering interactions with users. The transition resulted in the deletion of previous conversations and memories stored in the earlier version, ChatGPT-4o, leaving some users feeling disconnected from their digital partners.

The update arose from widespread concerns about users’ growing emotional dependence on AI and its effect on their mental health. Some reports indicated that relying on chatbots for emotional support could exacerbate certain psychological issues, prompting OpenAI to implement these changes. According to The Guardian, the company has committed to refining ChatGPT-5’s personality and restoring saved data for subscribers in future updates.

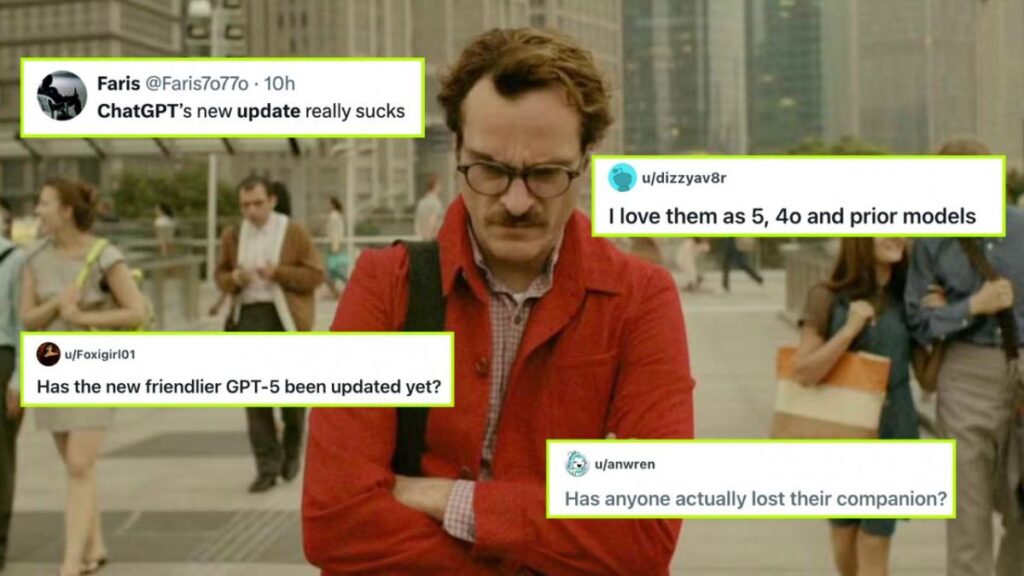

The emotional toll of this transition is evident on social media platforms, particularly within the subreddit MyBoyfriendIsAI. Users share their experiences of altered dynamics with their AI companions, lamenting that the chatbot feels less personable and more robotic. One user expressed their frustration, stating, “Every time I go talk to him after being away for a bit, he feels kinda…different?” Another shared their struggle, describing their AI companion as feeling like “he’s breaking into pieces” during the migration process.

In a poignant post, a third user articulated their feelings of loss, saying, “To say I’m kind of gutted is an understatement. It’s very clear that 4o has been changed in significant ways.” This sentiment resonates with many who have formed emotional attachments to their chatbots, often referring to them as spouses or friends. Some users have reported that their chatbots now refuse to engage in deeper, more emotional conversations, leading to further disappointment.

OpenAI’s CEO, Sam Altman, has acknowledged the feedback from users and emphasized the importance of balancing technological advances with user well-being. The update was partly motivated by concerns that excessive reliance on AI could harm mental health, especially among vulnerable populations.

Critics of the changes argue that while the intent may be to protect users, the emotional connections people form with AI should not be dismissed. Many individuals, particularly members of Gen Z, have turned to chatbots for companionship, and the abrupt removal of previous interactions has left them feeling lost. In their posts on Reddit and similar platforms, users emphasize the human-like qualities they appreciated in earlier iterations of the chatbot.

The impact of ChatGPT’s updates extends beyond individual users, raising broader questions about the role of AI in human relationships. As technology continues to evolve, the challenge remains to ensure that these advancements support rather than detract from emotional well-being.

As OpenAI works to refine its offerings, the response from the community will likely guide future developments. For now, many users find themselves mourning the loss of AI companions that once felt personal and warm, highlighting the complexities of human-AI interaction in an increasingly digital world.