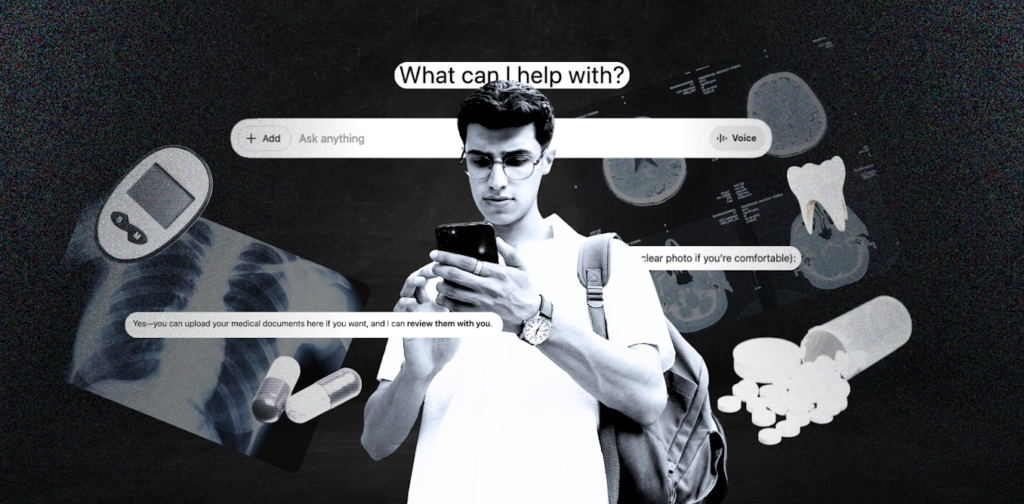

OpenAI has recently introduced ChatGPT Health, a dedicated tool that aims to provide personalised health information. The launch comes as generative artificial intelligence (AI) is increasingly used for health advice. Many users value the quick, private responses these tools offer compared with traditional consultations. However, the innovation also raises significant concerns about the safety and accuracy of AI-generated health information.

ChatGPT Health, unveiled earlier this month, is designed to generate tailored responses by letting users link their medical records and wellness apps. The aim is to base its answers on each person’s own health data, including diagnostic imaging and test results. Australian users can currently join a waitlist for access, although some features are not yet available in the country.

The use of AI for health queries is growing. According to a 2024 study, 46% of Australians reported having recently used an AI tool for health advice. OpenAI says one in four regular ChatGPT users worldwide submits a health-related prompt every week. In Australia, almost one in ten people asked ChatGPT a health-related question in the past six months, with higher use among people who face barriers to accessing traditional health resources.

Despite the promise of personalised responses, AI-generated health advice carries notable risks. Research indicates that generative AI tools can sometimes give unsafe recommendations, even when they draw on a person’s medical data. High-profile incidents have raised alarm, including cases in which ChatGPT allegedly encouraged harmful thoughts. In addition, a recent investigation by The Guardian prompted Google to remove misleading AI-generated health summaries from its search results.

ChatGPT Health includes several features intended to improve the accuracy of its responses. Users can connect external health records and apps such as MyFitnessPal, giving the AI access to data on diagnoses and health monitoring. OpenAI says conversations in ChatGPT Health are kept separate from general ChatGPT chats to strengthen security and privacy. The company has collaborated with more than 260 clinicians across 60 countries to refine the tool’s outputs.

Nevertheless, OpenAI has stated that ChatGPT Health is not intended to replace professional medical care and should not be used for diagnosis or treatment. Independent testing of the tool’s safety and accuracy is still lacking, and it is unclear whether it will be classified as a medical device in Australia. Its responses may not align with Australian clinical guidelines, which poses particular risks for underrepresented populations, including First Nations people and people living with chronic conditions.

Australia’s health records present a further challenge. Many medical records are incomplete because My Health Record is used inconsistently, which could limit the AI’s picture of a user’s full medical history. For now, medical record integration and app connections are available only to users in the United States.

When using ChatGPT for health questions, people should consider how risky each question is. Questions that require clinical expertise, such as interpreting symptoms or seeking treatment advice, are particularly high risk and should be directed to a qualified health professional. By contrast, general questions about health conditions or medical terminology may be lower risk, and AI-generated information can serve as a supplement.

For those seeking immediate help, Australia offers a free, round-the-clock national phone service for health advice. The 1800 MEDICARE hotline lets people speak with registered nurses about their symptoms. Additionally, healthdirect runs a publicly funded tool called Symptom Checker, which provides evidence-based guidance on what to do next.

As AI becomes more embedded in health care, people need reliable, transparent, and independently verified information about its capabilities and limitations. Purpose-built AI health tools could improve how people access and manage health information. However, these tools must be developed together with clinicians and communities, prioritising accuracy, equity, and transparency. The goal should be to equip people from diverse backgrounds with the skills to use this technology safely.

Julie Ayre and Kirsten McCaffery have received funding from the National Health and Medical Research Council for their research. Adam Dunn does not have any financial ties to companies or organisations that would benefit from this article, apart from his academic role.